Why We Keep Using ChatLLM Despite Everything That's Wrong With It

This article is part of a series on our journey and how we at Another Cup of Coffee are adapting to Artificial Intelligence. Competing in a market dominated by larger agencies means we have to be smart and a little bit scrappy, willing to experiment with new tools and alternative ways of working.

Over the past two years, AI has become a crucial part of our strategy, helping us punch above our weight and deliver more value to our clients. In this post, I take a closer look at a tool that has become central to our workflow: ChatLLM from Abacus.AI.

ChatLLM has been an incredibly useful addition to our toolkit, but it's certainly not without its drawbacks. While Abacus.AI's pricing makes their offering compelling, there are many areas where it falls short, such as poor documentation, almost no support, and unreliable implementation. Nevertheless, ChatLLM has been a valuable tool, and I'll dive into our experiences with it, covering both the positives and the frustrations.

This post has not been sponsored by Abacus.AI, but if you're interested in ChatLLM, we have a referral link for those who want to give it a try.

How AI Has Changed Our Work

Before I get into ChatLLM specifically, it's worth briefly mentioning how AI has helped our day-to-day work. We've found that with the right AI tools, we can take on projects that would normally need more people, and we spend a lot less time on boring repetitive tasks, such as data cleaning and processing.

The quality of what we deliver has gone up and clients get better value while we continue to keep our pricing competitive. There have been many cases where we've been able to offer insights that used to require bringing in highly-paid specialists.

For example, there have been a number of projects where we've been able to save our clients money by delivering close-to-final draft documentation and reports that required specialist expertise. Instead of immediately hiring costly third-party consultants, clients simply had our AI-generated drafts reviewed by their in-house counsel, significantly reducing professional fees.

We've also cut expenses by using AI assistants to rapidly code custom, single-purpose tools rather than purchasing expensive off-the-shelf software or subscriptions. Those costs can add up significantly, especially for small agencies like ours. Building such bespoke utilities to solve specific one-time project challenges would not have been feasible without AI.

Being an early mover into AI integration has given us insight into the massive shifts coming to the workforce and to society in general. We have already started adapting our business and skills to thrive in this new environment so I'm now more confident about Another Cup of Coffee's future.

What We're Using

We've tried loads of AI platforms over the past couple of years. Some stuck around but many others didn't. Aside from ChatLLM, we regularly use a range of other tools including:

- Augment*

- Claude Code

- Gemini CLI*

- Google AI Studio

- Groq

- Julius

- Mistral's Le Chat

- napkin.ai

- NotebookLM

- Ollama with Open WebUI

- Replit

- Windsurf*

October 2025 update: We've replaced a number of tools with Claude Code, which has proven superior.

They all have their specific use-cases but ChatLLM is definitely the one I personally reach for most often.

ChatLLM from Abacus.AI: What's Good and What's Not

What Is It?

As its name implies, ChatLLM is Abacus.AI's chat-based AI platform that gives you access to a bunch of different LLMs through one interface. You pay US$10 per user per month and get access to the most popular models, such as ChatGPT, Claude Sonnet, Gemini and DeepSeek. Models are released so frequently that I haven't bothered to include the version numbers.

Abacus.AI doesn't just give access to multiple models. You get specialised tools like CodeLLM, their AI-assisted VS Code editor, and AI Engineer to help you build custom chatbots and AI agents.

Why We Like It

It's cheap. That's it. $10 a month for all those models is excellent value, as we'd be paying $50-$100 or more per month if we subscribed to our most-used models individually. This means we can use the strengths of the different models rather than trying to make one model do everything.
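To put rough numbers on that saving, here is a minimal sketch in Python. The $10 price and the $50-$100 range are the figures quoted above; the function name `annual_saving` and the three-person team are illustrative assumptions, not real billing data.

```python
# Back-of-the-envelope saving from one ChatLLM seat versus separate subscriptions.
# The prices are this article's own rough figures; everything else is hypothetical.

CHATLLM_MONTHLY = 10            # US$ per user per month
INDIVIDUAL_MONTHLY = (50, 100)  # rough low/high cost of subscribing to each model separately


def annual_saving(individual_monthly: int, users: int = 1) -> int:
    """Yearly saving in US$ for `users` seats at a given individual-subscription cost."""
    return (individual_monthly - CHATLLM_MONTHLY) * 12 * users


if __name__ == "__main__":
    low, high = INDIVIDUAL_MONTHLY
    for users in (1, 3):  # 3 is a hypothetical team size
        print(f"{users} user(s): ${annual_saving(low, users)} to ${annual_saving(high, users)} per year")
```

On those assumptions, a single user saves somewhere between $480 and $1,080 a year.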

We use it for all sorts of things: writing first drafts of client proposals; creating documentation or reports; helping debug and improve code; project planning; summarising project progress; and general brainstorming. It's not all great and the low price comes at other costs.

The Annoying Bits

Poor Documentation

The documentation is absolutely terrible, outdated and severely incomplete. Help pages casually reference features and interface elements without any explanation, expecting you to be familiar with their terminology for the various screens and settings. I've wasted so much time trying to figure out basic stuff that should be clearly explained.

No Customer Support

Customer support is also non-existent. I've sent questions about specific features and they go into a black hole. Good luck to you if you encounter problems. I suspect they're putting all their effort into supporting enterprise clients. If you're a small or medium-sized business, this will no doubt make you wonder if this is a solution you can trust with production-grade tasks. Right now, we don't use it for any client-facing solutions, and a big reason is the lack of customer support.

Terrible Interface

The interface is horrible too. It feels like it was designed by engineers who just bolted on features and stuck them in weird places. Aspects such as their version of the ChatGPT Playground are a complete kludge. You can't expect the kind of seamless interaction with your work that's available on ChatGPT's user interface.

CodeLLM - A Waste of Time?

CodeLLM is Abacus.AI's answer to AI-assisted code editors like Copilot, Cursor and Windsurf. However, it feels like someone's half-hearted attempt at a side project, thrown together after hours and released, then forgotten. I won't even bother listing its problems and I recommend not wasting your time even trying it. Maybe CodeLLM will get better over time but I really don't see why it's available at all. Stick with the more well-known options.

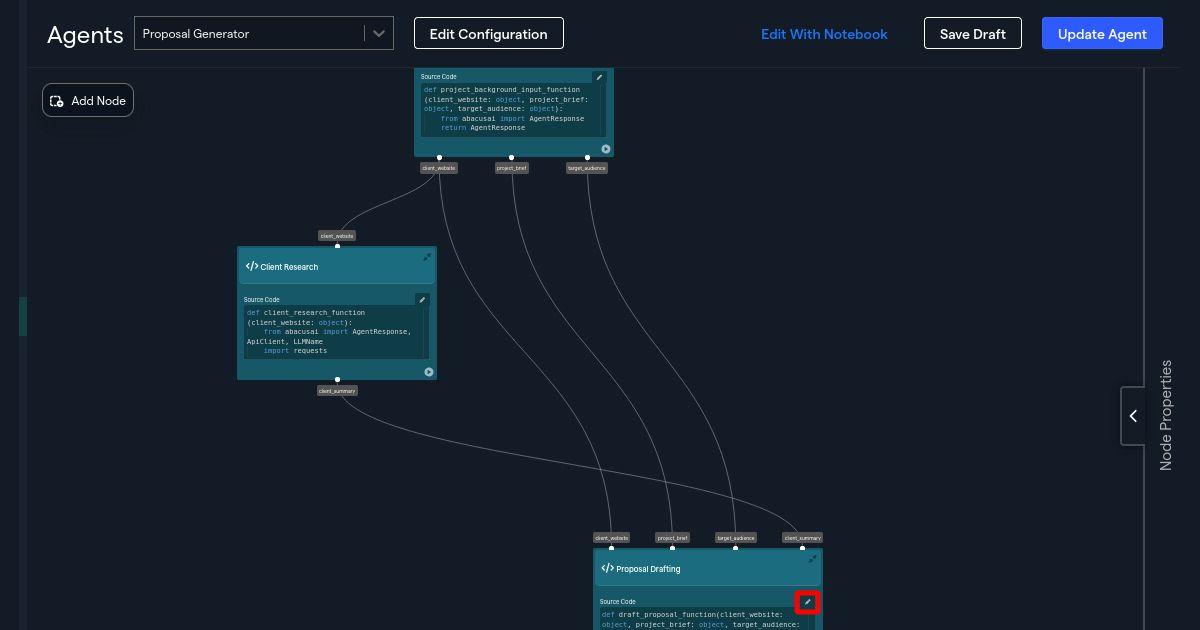

Unreliable Custom Agents

When this review was first posted in January 2025, I mentioned my love-hate relationship with the custom agent feature. Back then, I found it useful for building agents to handle tasks we do regularly, such as generating documents, manipulating our back-office financial transactions, or providing chat access to local knowledge bases. I also mentioned problems with poor documentation and unexpectedly burning through credits using the AI Engineer feature.

Now I've stopped using custom agents altogether because they've become unreliable. For some reason, previously working agents will hang, producing no output, or spit out an error. I did try contacting Abacus.AI support but there was no response or even acknowledgement that they'd received my message.

Unclear Pricing and Service Limits

You'd think that at $10 per user per month, you'll have a good idea of what you'll be paying. But that's not the case. Each user is allocated 2,000,000 compute points per month. What exactly that means is not at all clear. Here's Abacus.AI's explanation:

"Compute points are NOT TOKENS. They are simply a measure of usage of ChatLLM. With 2M compute points, you will be able to send 50,000+ messages on some LLMs in a month. 1,000,000 (1M) compute points can be as much as 70,000,000 (70M) tokens on some LLMs."

OK, so 50,000+ messages sounds like a lot and most months we're well within the limit. However, a few times I managed to burn through most of my compute points in a couple of afternoons of using AI Engineer. How? No idea.
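The only hard numbers in Abacus.AI's explanation are upper bounds, but they are enough for some rough arithmetic. The sketch below, in Python, works out what the quoted figures imply in the best case. The constants come straight from the statement above; the function names and the 1,500,000-point usage figure are hypothetical, and actual per-request costs (especially for AI Engineer) aren't known, so treat the results as ceilings rather than predictions.

```python
# Best-case arithmetic from Abacus.AI's compute-point statement.
# These are "up to" figures for "some LLMs", so real usage will cost more per message.

MONTHLY_POINTS = 2_000_000               # allocation per user per month
BEST_CASE_MESSAGES = 50_000              # "50,000+ messages on some LLMs"
TOKENS_PER_MILLION_POINTS = 70_000_000   # "as much as 70M tokens on some LLMs"


def points_per_message() -> float:
    """Cheapest implied cost of one message, in compute points."""
    return MONTHLY_POINTS / BEST_CASE_MESSAGES


def best_case_tokens(points: int) -> int:
    """Most tokens a given number of points could buy on the cheapest models."""
    return points * TOKENS_PER_MILLION_POINTS // 1_000_000


def share_of_allocation(points_used: int) -> float:
    """Fraction of the monthly allocation consumed by `points_used`."""
    return points_used / MONTHLY_POINTS


if __name__ == "__main__":
    print(points_per_message())              # 40.0
    print(best_case_tokens(MONTHLY_POINTS))  # 140000000
    print(share_of_allocation(1_500_000))    # 0.75 (hypothetical usage figure)
```

At best, then, a message costs about 40 points, so anything that drains most of the allocation in a couple of afternoons must be costing far more than that per request.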

New Features (mid-2025)

Abacus.AI has added a few more features to ChatLLM since I originally wrote this post:

- DeepAgent: an autonomous agent that can help you with tasks like research, planning or building apps.

- Tasks: a scheduling and automation utility designed to run AI-powered actions at certain times, intervals, or triggers.

- Apps: no-code and full-code methods for launching apps.

- Projects: a feature to organize chats, files, and workflow instructions into folder workspaces.

- RouteLLM: a routing mechanism to send your queries to the best underlying language model.

Because of my poor experience with ChatLLM Custom Agents, I really haven't bothered to try out DeepAgent, Tasks or Apps. These new features may be worth some experimentation if I were a reviewer or YouTube AI influencer, but my focus is on getting work done. When you're running a business, you rarely have time to play with new things, and I feel that Abacus.AI wasted my time with Custom Agents.

Projects and RouteLLM are different though. They're built-in improvements to the chat interface and are probably my two favourite ChatLLM additions, so I'll spend a bit of time talking about them.

ChatLLM Projects

This feature is exactly like ChatGPT Projects and there's not much explanation needed here. You can organise chats into folders for easy reference. They're perfect for working on different areas within your business. In some ways, it has replaced ChatLLM's flaky Custom Agents implementation for my needs.

I'll have different 'agents' in project folders where I copy and paste prompts from old chats to get certain types of work done. For example, I'll have projects for proposals, coding tasks and client needs, like contract review or support requests. Each project can have its own custom instructions and files for context. This is perfect if you want to quickly create a task-specific AI without having to mess around with building an actual agent.

RouteLLM

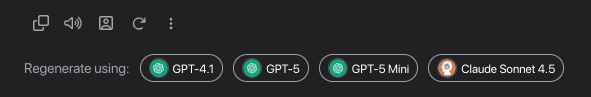

When Abacus.AI first deployed RouteLLM, it was just one model among many alongside GPT, Claude, and Gemini. Now it's the default option. Your chats will go through RouteLLM unless you manually set your model.

It does a fairly good job of routing your chat to the right model but seems to favour OpenAI's GPT-5. This could be specific to my own use-cases though. It will sometimes inappropriately route queries to Gemini 2.5 Flash, and I suspect this is Abacus.AI trying to reduce their API costs.

But what's really great is the Regenerate using option. If you're not quite happy with a response, or you want to see how a different model will respond, just click the model button underneath. This is a massive time-saver. The alternative would be to switch your session to another model provider or use yet another service like Boxchat or chatplayground.ai.

Is ChatLLM Worth the Price?

Despite all those frustrations, ChatLLM is the AI tool we use most, and this is still the case many months after originally posting this review. The value it provides is just too good to ignore. We've become used to its shortcomings and found ways around its poor interface.

I've been tempted to re-subscribe to ChatGPT or Claude whenever they release a new model but stopped to think: is it worth the extra expense when they'll be available on ChatLLM within days?

If you're a small agency or freelancer and you can put up with some friction in exchange for powerful capabilities at an affordable price, ChatLLM is definitely worth looking at. Just don't think you'll get anywhere near a polished experience or helpful support for your $10.

Being Transparent

I should mention that Abacus.AI is active in advertising through content creators, especially on YouTube. We haven't received anything for this review and I'm simply sharing our experience as paying customers. I genuinely find the tool useful and worth recommending, despite its many problems.

Give It a Try

If you're curious about what ChatLLM might do for your business, I think it's worth trying for the price of a couple of cups of coffee. Use our referral link here. (We'll get $5 if you sign up but they don't say what you'll get. Probably nothing.)

I certainly won't call myself an Abacus.AI fan, but I do think other small agencies and freelancers might benefit greatly from this tool, warts and all. For us, the trade-offs have been worth the price and like any tool, what matters is whether it fits your specific needs and how you work. ChatLLM definitely isn't perfect, but it's been really useful for us so for the price, you'll lose very little to find out if it works for you too.

Use our referral link to try ChatLLM.
